Nobody believes that every AI project succeeds. Just ask MD Anderson, which blew $60 million on a Watson project before pulling the plug.

That project was a clown show. A report published by University of Texas auditors found that project leadership:

- Did not use proper contracting and procurement procedures

- Failed to follow IT Governance processes for project approval

- Did not effectively monitor vendor contract delivery

- Overspent pledged donor funds by $12 million

IT personnel working on the project hesitated to report exceptions because the project leader’s husband was MD Anderson’s President. Project scope grew like kudzu. MD Anderson executed 15 contracts and amendments in a series of incremental expansions. The budget for many of these was just below the threshold for Board approval, which suggests deliberate structuring to avoid scrutiny.

Interestingly, the massive expansion in project scope coincided with a $50 million pledge from “billionaire party boy” Low Taek Jho. (Low recently cut a deal with the US government to avoid prosecution on charges related to the 1MDB scandal.)

So it’s not news that some AI projects fail.

Last week, Fast Company published a piece with the clickbait title “Why AI is Failing Business.” The authors, an economist and the two co-founders of a tiny startup, want you to believe that failure is the norm for AI projects.

The article exemplifies a genre I call Everyone is Stupid Except Us. Practitioners of this approach paint a dire picture of current practices. The implicit message is that they have a magic bean that will set things straight.

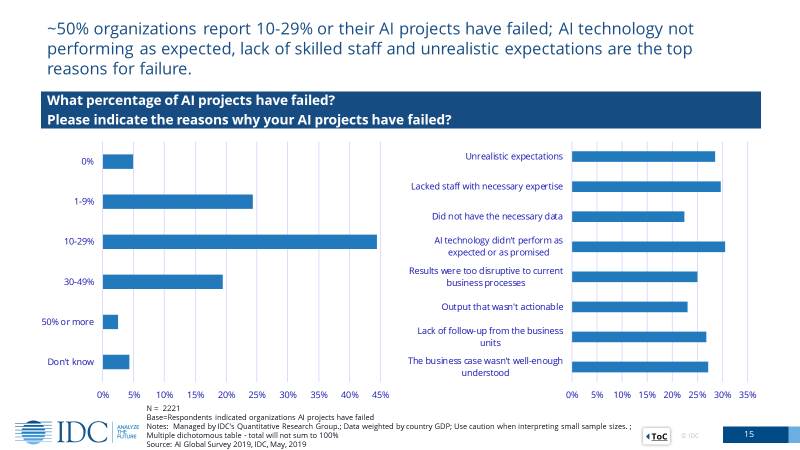

Citing an IDC report, the authors write that “most organizations reported failures among their AI projects, with a quarter of them reporting up to a 50% failure rate.”

Wow. Fifty fucking percent.

That number sounds fishy, so I pulled the report and checked with the author. Here’s the pertinent page:

The first part of the authors’ claim is correct. About 92% of the organizations surveyed by IDC reported one or more AI project failures.

The rest is misconstrued. Only about 2% of respondents reported failure rates as high as 50%, and 21% reported a failure rate of more than 30%.

Most respondents reported a failure rate of 30% or less.

In an ideal world, no AI project would fail. But put that failure rate in context. According to a report from the Project Management Institute, only about 70% of all projects completed in 2017 met original goals and business intent, which means roughly 30% fell short.

In other words, AI projects are about as likely to fail as any other IT project.

The authors of the Fast Company piece bloviate for another 11 paragraphs about why AI projects fail. They could have just shifted their eyeballs to the right on the page they misquote, where IDC tabulates the reasons for AI project failure. The top five cited by respondents are, in descending order:

- AI technology didn’t perform as expected or as promised

- Lacked staff with the necessary expertise

- Unrealistic expectations

- The business case wasn’t well enough understood

- Lack of follow-up from the business units

That first reason needs unpacking. Projects rarely fail because technology does not do what it is supposed to do. Projects fail because the buyer wants something the technology isn’t designed to deliver, or the organization cuts corners on implementation. In most cases, the customer and vendor share responsibility for that failure. The vendor may make misleading or exaggerated claims, the customer may fail to define requirements, or the customer may not perform the necessary due diligence.

It’s easier to blame the technology, though.

AI projects are the same as ERP projects or any other IT project. They succeed or fail based on the organization’s project management processes.

Next time you’re at a trade show and some AI vendor starts braying about their magic bean, do yourself a favor. Move on to the next booth.
